OpenCV is one of the most popular libraries for computer vision. Originally written in C/C++, it now also provides bindings for Python.

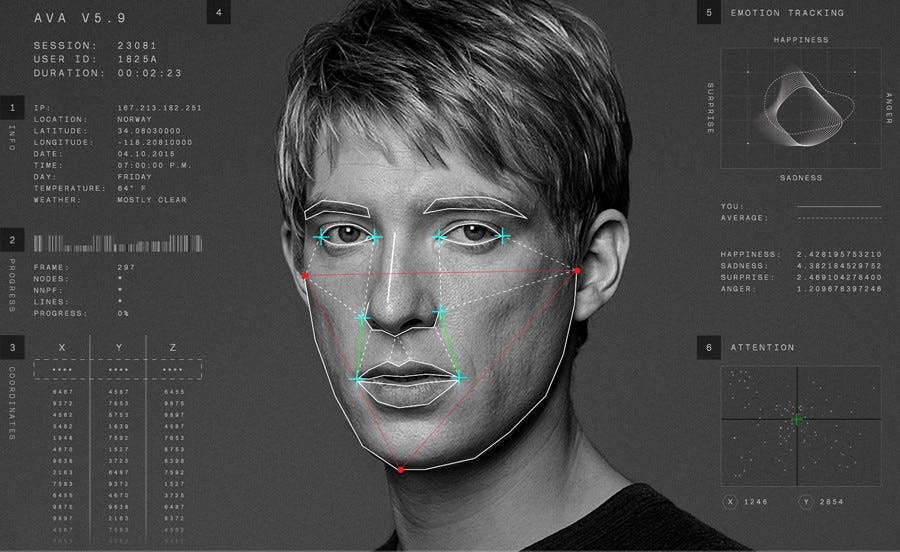

OpenCV uses machine learning algorithms to search for faces within a picture. Because faces are so complicated, there isn’t one simple test that will tell you if it found a face or not. Instead, there are thousands of small patterns and features that must be matched.
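To make "small patterns and features" concrete: the Haar cascades used later in this post are built from rectangle features, where one rectangle's pixel sum is subtracted from a neighbouring rectangle's sum. The sketch below is an illustration under that assumption, not OpenCV's internal code. It computes one horizontal two-rectangle feature with an integral image (summed-area table), which lets any rectangle sum be read with four lookups. The function names are hypothetical.

```python
import numpy as np

def integral_image(img):
    """Summed-area table with a leading row and column of zeros.

    ii[r, c] holds the sum of img[:r, :c].
    """
    ii = np.zeros((img.shape[0] + 1, img.shape[1] + 1), dtype=np.int64)
    ii[1:, 1:] = img.astype(np.int64).cumsum(axis=0).cumsum(axis=1)
    return ii

def rect_sum(ii, x, y, w, h):
    """Sum of the w x h rectangle whose top-left pixel is (x, y), in four lookups."""
    return ii[y + h, x + w] - ii[y, x + w] - ii[y + h, x] + ii[y, x]

def two_rect_feature(ii, x, y, w, h):
    """Horizontal two-rectangle Haar-like feature: left half sum minus right half sum."""
    half = w // 2
    return rect_sum(ii, x, y, half, h) - rect_sum(ii, x + half, y, half, h)
```

A region with a bright left half and a dark right half gives a large value, while a flat region gives zero. A trained cascade evaluates thousands of such features at many positions and scales, in stages. OpenCV's `cv2.integral` builds the same zero-padded table.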

Cascades

Though the theory may sound complicated, in practice it is quite easy. The cascades themselves are just a bunch of XML files that contain OpenCV data used to detect objects. You initialize your code with the cascade you want, and then it does the work for you.

Before we jump into face detection, let's learn some basics of working with OpenCV. In this section we will perform simple operations on images, such as opening them, drawing simple shapes on them and interacting with them through callbacks. This builds a foundation before we move on to the more advanced material.

In my program, I used a set of images of a particular person in different orientations to train my model.

I built the program in three stages:

- Data Creator or generator

- Trainer

- Predictor

DATA CREATOR: This program takes input from your webcam frame by frame, in grayscale mode of course, and saves each captured face to disk. The image name contains the user ID, which acts as the label for every picture and later lets the model predict that label.

face_cascade = cv2.CascadeClassifier('/home/zl05/work/haarcascades/haarcascade_frontalface_alt.xml')  # Load the cascade file

cv2.imwrite('dataset' + '/user' + '.' + str(id) + '.' + str(num) + '.jpg', roi_color)  # This line actually generates the image dataset from the webcam

Here, the value of 'id' is entered by the user, and 'num' is an image index, so that no two images get the same name. 'roi_color' is the region of the color frame that contains a detected face.
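The naming scheme is what turns a folder of images into labelled data. This small sketch builds the same `dataset/user.<id>.<num>.jpg` names and recovers the ID from a file name, which is how the trainer can get a label for each picture. Both helper names are hypothetical, and the parser assumes the ID itself contains no dots.

```python
import os

def sample_path(user_id, num, out_dir="dataset"):
    """Build the file name used by the data creator: <out_dir>/user.<id>.<num>.jpg"""
    return os.path.join(out_dir, f"user.{user_id}.{num}.jpg")

def id_from_path(path):
    """Recover the integer user ID (the training label) from such a file name."""
    name = os.path.basename(path)  # e.g. "user.3.17.jpg"
    return int(name.split(".")[1])  # parts are ["user", id, num, "jpg"]
```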

TRAINER: This program reads all the images stored by the generator, trains the model on this set of images of different user IDs, and then generates a '.yml' file containing the training information.

recognizer = cv2.face.LBPHFaceRecognizer_create()  # Initialize the recognizer object (cv2.face comes from the opencv-contrib-python package)

Now we read every image, store it as a NumPy array, and store its user ID alongside it.

recognizer.train(faces, np.array(ids))

recognizer.save('recognizer/train.yml')

These two lines of code train the model and save it as a '.yml' file on disk. Here 'faces' holds all the images, each stored as a NumPy array, and the IDs are also converted to a NumPy array to serve as the labels for the images.

PREDICTOR: This program does a very simple but important job: it predicts the label (user ID) for the faces in the frames coming from the webcam.

Id, conf = recognizer.predict(gray[y:y+h,x:x+w])  # Returns the predicted user ID and a confidence value

This line gives us the predicted user ID, that is, the predicted label. With the LBPH recognizer, conf is a distance, so a lower value means a closer match. We can then annotate the frame with OpenCV drawing tools, such as drawing rectangles and putting text on the image, to make the output of our model look better.

And that closes today's session. Thank you for your attention; I hope it helps you.